How Platforms Decide What You See: Inside Ranking and Recommendation Systems

Every time you open a feed, type a query into a search box, or scroll through recommended videos, a set of invisible systems decides what becomes visible to you.

These systems are often loosely called “algorithms,” but that word hides more than it reveals. What actually shapes your digital experience is not a single algorithm, but a layered set of ranking and recommendation systems designed to sort, filter, and prioritize information at massive scale.

This article explains, in clear and non-technical terms:

- What ranking and recommendation systems are

- What inputs they use

- What goals they optimize for

- Why different platforms show different results

- Why what you see is structured, intentional — and never random

This is not a critique, and not a technical manual. It is a map of the logic behind modern visibility.

What Are Ranking and Recommendation Systems?

At a basic level, these systems answer two questions:

- What content should be considered at all?

- In what order should it be shown?

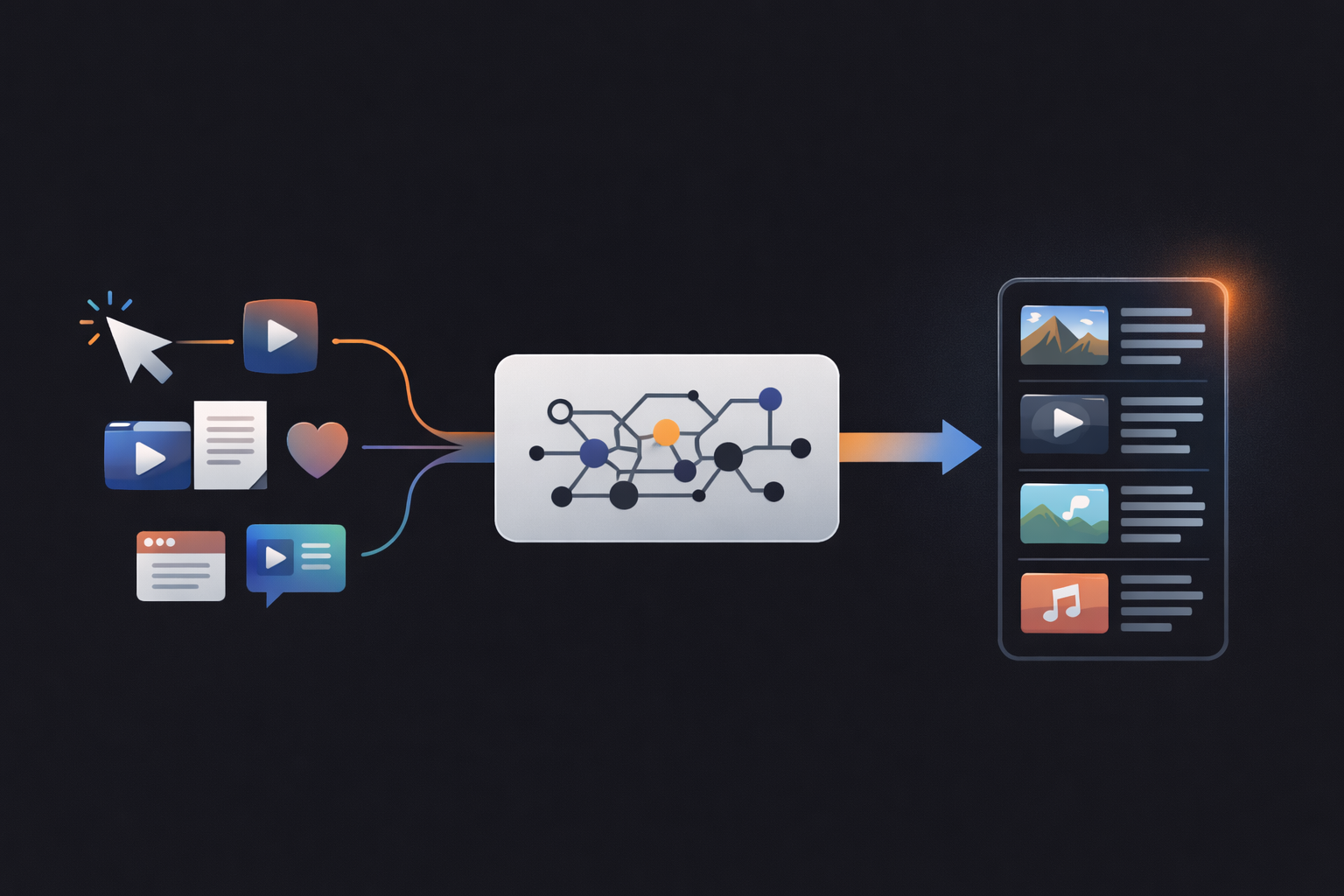

A ranking system takes a set of available items (posts, videos, articles, products, search results) and sorts them in a specific order, from most to least relevant according to some criteria.

A recommendation system goes one step further: it actively selects items it believes you are likely to engage with, even if you did not ask for them.

In practice, most platforms combine both:

- Search uses ranking (ordering results after a query).

- Feeds and “For You” pages use recommendation (choosing what to show).

- Ad systems use ranking and recommendation together.

Importantly, there is no single “algorithm.” There are pipelines: chains of filters, classifiers, scorers, and rules that progressively narrow down enormous amounts of content into a small set you actually see.

Your feed is not a window onto “everything that exists.” It is the output of a selection process.
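To make the idea of a pipeline concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular platform works: the `Item` fields, the stage names, and the scores are all invented for this example, and real systems use far more stages and far larger candidate pools.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    topic: str
    predicted_engagement: float  # hypothetical model output in [0, 1]
    flagged: bool = False        # hypothetical safety-classifier verdict


def candidate_filter(items, followed_topics):
    """Stage 1: decide what is considered at all."""
    return [it for it in items if it.topic in followed_topics]


def safety_rules(items):
    """Stage 2: drop anything the (hypothetical) classifier flagged."""
    return [it for it in items if not it.flagged]


def rank(items):
    """Stage 3: order the survivors by score, highest first."""
    return sorted(items, key=lambda it: it.predicted_engagement, reverse=True)


def build_feed(items, followed_topics, k):
    """Run the stages in order and keep only the top k items."""
    survivors = safety_rules(candidate_filter(items, followed_topics))
    return rank(survivors)[:k]


catalog = [
    Item("a", "cooking", 0.40),
    Item("b", "sports", 0.90),
    Item("c", "cooking", 0.75),
    Item("d", "cooking", 0.95, flagged=True),
    Item("e", "travel", 0.60),
]

feed = build_feed(catalog, followed_topics={"cooking", "travel"}, k=2)
print([it.item_id for it in feed])  # ['c', 'e']
```

Five items go in and two come out. Item "b" has a high score but is never considered, item "d" has the highest score but is removed by a rule, and item "a" survives every stage yet falls below the cut.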

Why Platforms Use These Systems

The core problem platforms face is not content creation — it is content overload.

Every minute, users across the largest platforms upload:

- Millions of posts, photos, and comments

- Thousands of hours of video

- Vast streams of news, opinions, and reactions

No human can meaningfully browse that volume.

Chronological ordering worked when platforms were small. It fails at scale because:

- You miss most content simply because of timing.

- The feed becomes noisy, repetitive, and overwhelming.

- Users disengage when relevance drops.

Ranking and recommendation systems exist to manage attention. They are designed to:

- Reduce overload

- Increase perceived relevance

- Keep users engaged long enough to form habits

From a platform perspective, these systems are not optional. They are the only way large digital spaces remain usable.

What Inputs These Systems Use (Signals)

These systems do not “know” you as a person. They do not understand your beliefs, values, or intentions in any human sense.

They operate on signals — measurable traces of behavior and content properties.

User behavior signals

These describe what you do, not who you are:

- What you click

- How long you watch

- What you scroll past quickly

- What you like, share, save, or comment on

- What you ignore

These signals are probabilistic. They do not mean “you like this.” They mean “people who behaved like this often engaged with this kind of content.”

You are modeled as a pattern, not as a self.
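One simple way to picture "people who behaved like this often engaged with this kind of content" is a similarity-weighted vote. The sketch below is a toy version of that idea under stated assumptions: the function names and the viewing histories are made up, and Jaccard overlap stands in for whatever similarity measure a real system might use.

```python
def jaccard(a, b):
    """Overlap between two sets of engaged-with items, from 0 to 1."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def engagement_estimate(target_history, other_histories, item):
    """Similarity-weighted share of other users who engaged with `item`."""
    weighted_hits = 0.0
    total_weight = 0.0
    for history in other_histories:
        # Compare on everything except the item being predicted.
        weight = jaccard(target_history, history - {item})
        total_weight += weight
        if item in history:
            weighted_hits += weight
    return weighted_hits / total_weight if total_weight else 0.0


you = {"v1", "v2", "v3"}
others = [
    {"v1", "v2", "v9"},  # similar to you, engaged with v9
    {"v1", "v2", "v3"},  # very similar to you, did not engage with v9
    {"v7", "v8", "v9"},  # nothing in common with you
]

estimate = engagement_estimate(you, others, "v9")
print(round(estimate, 2))  # 0.4
```

The result is a probability-like number, not a statement about what you like. The third user engaged with "v9" but has nothing in common with you, so their behavior carries no weight in the estimate.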

Content signals

These describe properties of the content itself:

- Format (video, text, image, length)

- Topic and keywords

- Freshness (new vs old)

- Engagement velocity (how quickly others interact with it)

- Who created it and how their previous content performed

This allows systems to compare items even before you interact with them.
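Two of the signals above, freshness and engagement velocity, are easy to express as small formulas. The following sketch shows one possible way to compute them. The half-life decay, the one-hour floor, and the example numbers are assumptions chosen for illustration, not values taken from any platform.

```python
def engagement_velocity(interactions, hours_since_posted):
    """Interactions per hour; the one-hour floor avoids inflating brand-new posts."""
    return interactions / max(hours_since_posted, 1.0)


def freshness(hours_since_posted, half_life_hours=24.0):
    """Exponential decay: 1.0 when new, 0.5 after one half-life."""
    return 0.5 ** (hours_since_posted / half_life_hours)


# Hypothetical posts: one older and popular, one new and rising.
posts = {
    "old_hit": {"interactions": 4800, "hours": 48.0},
    "new_riser": {"interactions": 300, "hours": 2.0},
}

for name, post in posts.items():
    velocity = engagement_velocity(post["interactions"], post["hours"])
    fresh = freshness(post["hours"])
    print(f"{name}: velocity={velocity:.0f}/hour, freshness={fresh:.2f}")
```

In this toy data the older post has sixteen times more total interactions, yet the newer post scores higher on both signals. Both numbers can be computed without knowing anything about the person who will eventually see the post.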

Contextual signals

These describe the situation:

- Time of day

- Device type

- Location (often coarse rather than precise)

- Trending topics

- Local or global events

This helps systems adapt recommendations to current conditions.

Together, these signals form the input layer. They are continuously updated, incomplete, and noisy — but sufficient for large-scale pattern matching.

What These Systems Optimize For

A crucial point: these systems do not optimize for truth, quality, or social value in any absolute sense.

They optimize for proxy goals chosen by the platform.

Typical objectives include:

- Keeping users active (retention)

- Increasing time spent on the platform

- Encouraging return visits

- Supporting advertising and revenue

- Avoiding regulatory or reputational risks

Each platform defines success differently:

- A search engine prioritizes relevance and speed.

- A video platform prioritizes watch time.

- A social platform prioritizes interaction and virality.

- A marketplace prioritizes conversion.

The system is not asking: “Is this good?”

It is asking: “Does this serve the platform’s objectives given the current user and context?”

This is not a flaw. It is the defining logic of the system.

Why Different Platforms Show Different Results

If two platforms show you different views of the world, it is because they are optimizing for different things.

For example, in very broad terms:

- Google is optimized for query satisfaction.

- TikTok is optimized for immersive engagement.

- YouTube is optimized for session length.

- X (Twitter) is optimized for real-time interaction and discourse.

- News platforms are constrained by editorial norms and legal responsibility.

Even if they observe similar behavior, their systems interpret and act on it differently because their goals differ.
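A small sketch can show how the same observations lead to different orderings once the goal changes. Everything here is hypothetical: the three items, the predicted values, and the two sets of weights are invented, and the labels "watch_time_platform" and "interaction_platform" do not describe any real company's formula.

```python
# Hypothetical per-item predictions for one user.
candidates = {
    "short_clip": {"click": 0.9, "watch_minutes": 0.5, "share": 0.30},
    "long_documentary": {"click": 0.3, "watch_minutes": 25.0, "share": 0.05},
    "hot_take": {"click": 0.6, "watch_minutes": 1.0, "share": 0.60},
}

# Two invented objectives that weight the same predictions differently.
objectives = {
    "watch_time_platform": {"click": 0.0, "watch_minutes": 1.0, "share": 0.0},
    "interaction_platform": {"click": 1.0, "watch_minutes": 0.0, "share": 5.0},
}


def rank_for(weights):
    """Order the candidates by a weighted sum of their predicted signals."""
    def score(signals):
        return sum(weights[name] * value for name, value in signals.items())

    return sorted(candidates, key=lambda item: score(candidates[item]), reverse=True)


for platform, weights in objectives.items():
    print(platform, rank_for(weights))
# watch_time_platform ['long_documentary', 'hot_take', 'short_clip']
# interaction_platform ['hot_take', 'short_clip', 'long_documentary']
```

Nothing about the user or the content differs between the two runs. Only the weights change, and the item ranked first by one objective is ranked last by the other.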

There is no neutral ranking. Every ranking expresses a choice.

Why Feeds and Search Are Never Neutral

Selection always implies exclusion.

When a system chooses what to show first, it also chooses what to hide, delay, or suppress. This does not require malicious intent. It is a structural effect of optimization.

Design decisions — such as what counts as “engagement,” how freshness is weighted, or how safety rules are applied — shape what becomes visible.

Visibility shapes perception.

Perception shapes beliefs about what is common, important, or real.

This is why ranking systems are not just technical infrastructure. They are epistemic infrastructure — systems that shape how knowledge itself is distributed.

What This Means for Society and Public Knowledge

These systems now sit between:

- Journalists and audiences

- Institutions and citizens

- Experts and the public

- Creators and culture

They influence what issues rise, what fades, and what never appears at all.

Understanding them does not mean rejecting them. It means recognizing that our shared reality is increasingly mediated by systems designed to optimize attention rather than understanding.

This does not make them evil. It makes them powerful.

And power always deserves to be understood.

Conclusion

Modern platforms do not simply host information. They organize it.

They do this through layered ranking and recommendation systems that:

- Filter overwhelming volumes of content

- Use behavioral and content signals

- Optimize for platform-defined goals

- Shape what becomes visible, when, and to whom

This process is structured, intentional, and deeply consequential.

It is not random. And it is not neutral.

Understanding this logic is the foundation for understanding everything else about digital platforms — from personalization, to misinformation, to the quiet ways technology reshapes what we collectively know.

Further reading

If you’d like to explore related aspects of this topic:

- Why Your Feed Never Looks the Same as Anyone Else’s (how personalization works in practice)

- AI as a Filter: Why You See Some Things and Never Others (how filtering systems shape visibility)

- How Platforms Quietly Shape Public Knowledge (how ranking systems influence what societies notice and ignore)